I could get the Cairo tiling by packing irregular pentagons in p4g ( http://milotorda.net/index.php/cairo-pentagonal-tiling/ ), but I could also get it by packing irregular pentagons in p4. The output density is 0.999999963353343.

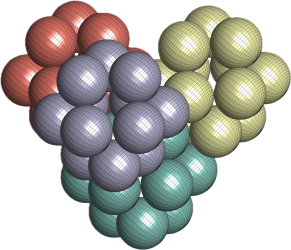

Left: Found configuration; Right: Zoom of the configuration
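
For readers who want to check a density figure like the one above, here is a minimal sketch of the usual calculation: the number of copies per primitive cell times the polygon area, divided by the cell area. The function names, the "house" pentagon, and the cell area are illustrative assumptions, not the shape or the code behind the result reported here. The only group-specific fact used is that p4 places 4 copies of a shape in general position in each primitive cell.

```python
def polygon_area(vertices):
    """Area of a simple polygon from its (x, y) vertices (shoelace formula)."""
    n = len(vertices)
    twice_area = 0.0
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


def packing_density(vertices, copies_per_cell, cell_area):
    """Fraction of the primitive cell covered by non-overlapping copies."""
    return copies_per_cell * polygon_area(vertices) / cell_area


# Hypothetical pentagon (a unit-high "house" on a 2-wide base), area 3.
house = [(0, 0), (2, 0), (2, 1), (1, 2), (0, 1)]

# Assumed values: 4 copies per cell (p4) and an illustrative cell area of 12.
density = packing_density(house, copies_per_cell=4, cell_area=12.0)
print(density)
```

A density this close to 1 means the copies cover essentially the whole cell, which is what one expects when the optimizer has converged to a tiling.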